Async Import Integration

This guide walks you through the steps required to integrate with Orgvue using the Async Import.

By integrating your HRIS data into Orgvue, you can ensure your Orgvue reports are up to date with the latest data available.

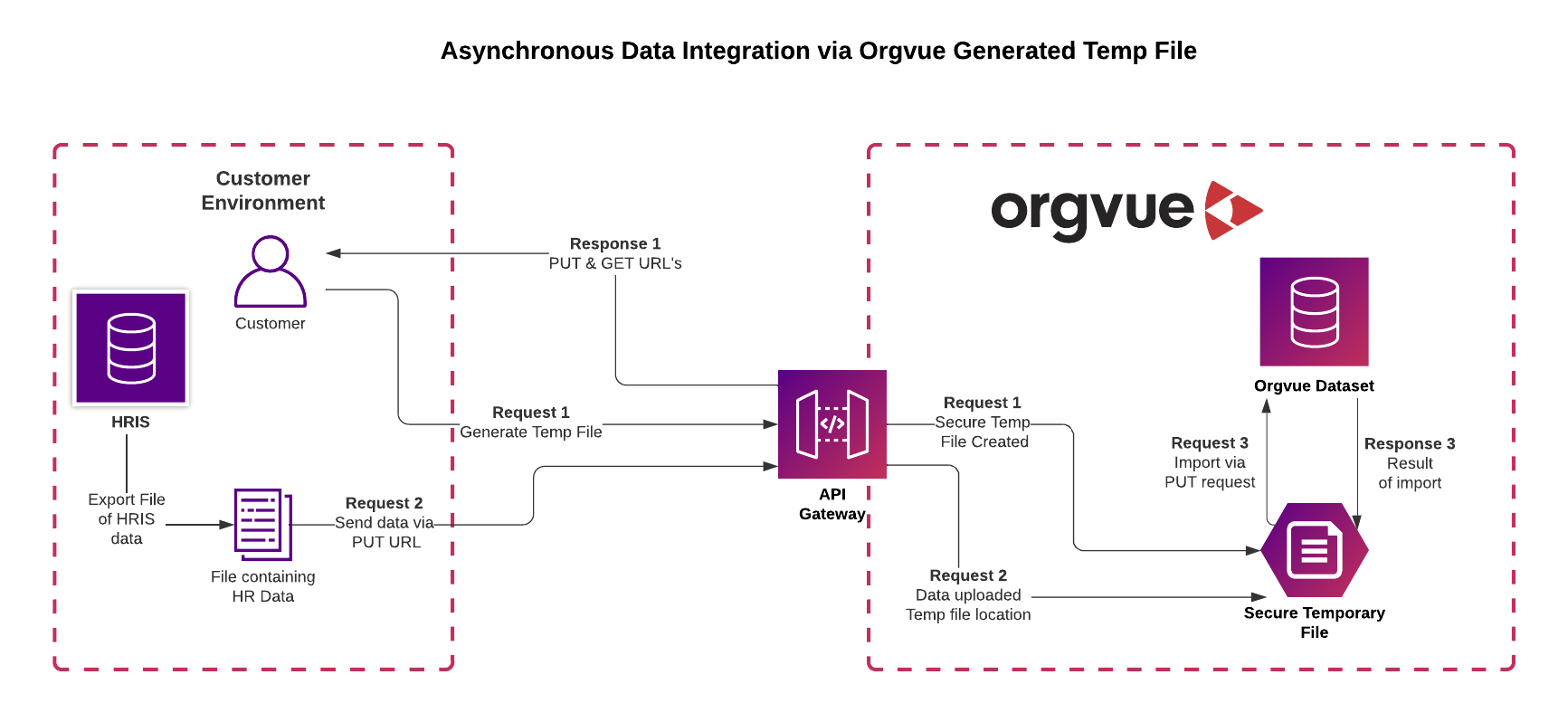

When you use the Async Import, the data is first uploaded to a temporary file location, and Orgvue then imports the data from that location.

Orgvue generates this location in your tenant for you to send the data to.

Flow Diagram

Prerequisites

- A dataset created in Orgvue

- All properties pre-configured in the dataset

- Access to an Orgvue tenant, and permission to use tokens for your API user (see User Management in Settings for more information)

Recipe for Importing Data into Orgvue

To synchronize data from your HRIS into Orgvue using the Async Import, you need the following APIs:

- POST: Generate a secure file with temporary access

- PUT: Create, update or delete items from a secure temporary file

You can find more advanced details and options in our API documentation.

1. Generate an API Token

The Orgvue API requires a JWT for authentication, which you can obtain from the Tokens page. Copy the token when you create it: it cannot be displayed again in the interface later.

2. Obtain your Base URL, Tenant ID, & Dataset ID

To integrate with Orgvue, you need the Base URL, your Tenant ID, and your Dataset ID, which you can find as follows:

- Base URL: the URL on which you created your API token (for example https://orgvue.eu-west-1.concentra.io)

- Tenant ID: via the tenant listing page

- Dataset ID: via the Dataset Metadata page or the "List datasets" endpoint
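As a minimal sketch, the Python below assembles the two Orgvue endpoint URLs used later in this guide (steps 5 and 7) from these three values. It accepts the Base URL with or without the `https://` prefix, because the Postman example includes the prefix while the endpoint templates add it themselves. The function names are hypothetical, not part of any Orgvue SDK.

```python
from urllib.parse import quote


def _host(base_url: str) -> str:
    # Accept "orgvue.eu-west-1.concentra.io" or "https://orgvue.eu-west-1.concentra.io/"
    return base_url.removeprefix("https://").rstrip("/")


def generate_file_url(base_url: str, tenant_id: str) -> str:
    # Endpoint that generates the secure temporary file (step 5)
    return f"https://{_host(base_url)}/api/v1/{quote(tenant_id, safe='')}/files/generate"


def import_url(base_url: str, tenant_id: str, dataset_id: str) -> str:
    # Endpoint that imports the temporary file into the dataset (step 7);
    # IDs are percent-encoded in case they contain reserved characters
    return (
        f"https://{_host(base_url)}/api/v1/{quote(tenant_id, safe='')}"
        f"/datasets/{quote(dataset_id, safe='')}/items/import"
    )
```

For example, with a hypothetical tenant `acme` and dataset `ds-123`, `import_url("https://orgvue.eu-west-1.concentra.io", "acme", "ds-123")` yields `https://orgvue.eu-west-1.concentra.io/api/v1/acme/datasets/ds-123/items/import`.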

3. Set the Content-Type Header

Set the Content-Type header to: application/json; charset=UTF-8

4. Set the Authorization Header

Set the Authorization header to Bearer {APIToken}, where {APIToken} is the API token generated in step 1.
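The two headers can be kept together in one small helper. This is a sketch, not an Orgvue-provided client; `orgvue_headers` is a hypothetical name.

```python
def orgvue_headers(api_token: str) -> dict:
    """Return the Content-Type (step 3) and Authorization (step 4) headers."""
    if not api_token:
        # The token is only shown once, on the Tokens page, when it is created
        raise ValueError("empty API token; generate one on the Tokens page")
    return {
        "Content-Type": "application/json; charset=UTF-8",
        "Authorization": f"Bearer {api_token}",
    }
```

Because the token cannot be displayed again after creation, one option is to store it outside your code, for example in an environment variable (the name `ORGVUE_API_TOKEN` here is hypothetical), and call `orgvue_headers(os.environ["ORGVUE_API_TOKEN"])` after `import os`.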

5. Generate a Secure File with Temporary Access

Before you can use the Orgvue temporary file location, you must generate it. Generating it returns a GET URL and a PUT URL, used to read from and write to the location.

Data written to the location is encrypted at rest and is automatically deleted after a set period.

Send the following request to generate the secure file:

POST https://{baseUrl}/api/v1/{tenantId}/files/generate

The JSON payload for the request has the following format:

```json
{
  "targetType": "json",
  "targetEncoding": "identity"
}
```

If the file to be imported is in CSV format, the JSON payload for the request is:

```json
{
  "targetType": "csv",
  "targetEncoding": "identity"
}
```

The response to either request is a pair of URLs, one for use with the GET command and one for the PUT command:

```json
{
  "get": "https://...",
  "put": "https://..."
}
```

For more detail on working with temporary files, see Security and working with temporary files.
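The generate call can be sketched with Python's standard `urllib.request`. The function names are hypothetical; the request only mirrors the endpoint, headers, and payloads described in steps 3 to 5, and the parser assumes the `get`/`put` response shape shown above.

```python
import json
import urllib.request


def build_generate_request(base_url: str, tenant_id: str, api_token: str,
                           target_type: str = "json") -> urllib.request.Request:
    """Build the POST request that generates a secure temporary file."""
    if target_type not in ("json", "csv"):
        raise ValueError("target_type must be 'json' or 'csv'")
    host = base_url.removeprefix("https://").rstrip("/")
    payload = {"targetType": target_type, "targetEncoding": "identity"}
    return urllib.request.Request(
        f"https://{host}/api/v1/{tenant_id}/files/generate",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json; charset=UTF-8",
            "Authorization": f"Bearer {api_token}",
        },
    )


def parse_generate_response(body: bytes) -> tuple[str, str]:
    """Return (get_url, put_url) from the generate response body."""
    urls = json.loads(body)
    return urls["get"], urls["put"]


# Sending the request (performs a real network call):
# req = build_generate_request(base_url, tenant_id, api_token, "json")
# with urllib.request.urlopen(req) as resp:
#     get_url, put_url = parse_generate_response(resp.read())
```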

6. Upload Data to the PUT URL

Upload the data by sending a PUT request to the PUT URL returned in step 5:

PUT {PUT URL from step 5}

The JSON payload for the upload uses the same format as the "Async import" endpoint:

```json
[
  {
    "_action": "create",
    "employeeID": "1453878",
    "fullName": "John Smith",
    "positionTitle": "Software Engineer",
    "grade": "G3",
    "salary": 45000,
    "dob": "1981-05-15",
    "managerID": "5871235"
  },
  {
    "_action": "update",
    "employeeID": "3101881",
    "salary": 65000
  },
  {
    "_action": "delete",
    "employeeID": "6758274"
  }
]
```
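A sketch of the JSON upload request, again using `urllib.request`. It checks that every item carries one of the three `_action` values used in the example. This guide does not say which headers, if any, the generated PUT URL expects, so the sketch assumes none are needed because the URL itself grants temporary access; confirm this in the API documentation. `build_json_upload` is a hypothetical name.

```python
import json
import urllib.request

VALID_ACTIONS = ("create", "update", "delete")


def build_json_upload(put_url: str, items: list) -> urllib.request.Request:
    """Build the PUT request that writes item updates to the temporary file."""
    for item in items:
        # Each item must state what to do with it: create, update or delete
        if item.get("_action") not in VALID_ACTIONS:
            raise ValueError(f"missing or invalid _action in {item!r}")
    # Assumption: the generated PUT URL grants temporary access by itself,
    # so no Authorization header is added here
    return urllib.request.Request(
        put_url, data=json.dumps(items).encode("utf-8"), method="PUT"
    )


items = [
    {"_action": "update", "employeeID": "3101881", "salary": 65000},
    {"_action": "delete", "employeeID": "6758274"},
]
# put_url comes from step 5; urllib.request.urlopen(build_json_upload(put_url, items))
# would send the upload
```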

If you upload the data as a CSV file, the payload has the following format:

```csv
_action,employeeID,fullName,positionTitle,grade,salary,dob,managerID,location
create,1453878,John Smith,Software Engineer,G3,65000,1981-05-15,5871235,London
create,1453974,Mary Jones,Consultant,H7,67500,1997-06-01,4451276,New York
create,2178493,Rex James,Graduate Engineer,C2,37500,2002-08-10,5871235,Sydney
update,3101881,,Senior Engineer,H7,75000,,,
update,3758954,,,,,,5542698,
delete,6758274,,,,,,,
```
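If your HRIS export is held as a list of records, Python's `csv.DictWriter` can produce this layout, leaving any property an item does not set as an empty cell, as in the update and delete rows. The column list is taken from the example; `items_to_csv` is a hypothetical helper, and the resulting text would be uploaded to the PUT URL in the same way as a JSON body (with the file generated using `"targetType": "csv"`).

```python
import csv
import io

# Columns from the example above; adjust to the properties in your dataset
COLUMNS = ("_action", "employeeID", "fullName", "positionTitle",
           "grade", "salary", "dob", "managerID", "location")


def items_to_csv(items: list, columns: tuple = COLUMNS) -> str:
    """Serialise item updates to CSV; unset properties become empty cells."""
    buf = io.StringIO()
    # restval fills missing keys; unknown keys raise ValueError by default
    writer = csv.DictWriter(buf, fieldnames=columns, restval="", lineterminator="\n")
    writer.writeheader()
    writer.writerows(items)
    return buf.getvalue()
```

For instance, `items_to_csv([{"_action": "delete", "employeeID": "6758274"}])` returns the header line followed by `delete,6758274,,,,,,,`.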

7. Import Data from the Temporary File to the Dataset

Import the data via https://{baseUrl}/api/v1/{tenantId}/datasets/{datasetId}/items/import.

Specify the import location using the GET URL from step 5.

If required, specify an alternative Result Location URL to which the result status is posted. If no result location is specified, the result is posted using the original PUT URL.

Defining an optional Callback URL in the payload enables the API to notify you when processing is complete.

The JSON payload for the import has the following format:

```json
{
  "importLocation": {
    "method": "GET",
    "url": "https://example.com"
  },
  "resultLocation": {
    "method": "PUT",
    "url": "https://example.com"
  },
  "callback": {
    "method": "GET",
    "urlTemplate": "https://example.com?status={{jobStatus}}&traceId={{traceId}}&tenantId={{tenantId}}"
  }
}
```
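This sketch builds the import request, adding `resultLocation` and `callback` to the payload only when supplied, since both are optional. The HTTP method is an assumption: the recipe lists a PUT API for creating, updating or deleting items from a secure temporary file, which the sketch takes to be this import endpoint; confirm it against the API documentation. `build_import_request` is a hypothetical name.

```python
import json
import urllib.request
from typing import Optional


def build_import_request(base_url: str, tenant_id: str, dataset_id: str,
                         api_token: str, get_url: str,
                         result_put_url: Optional[str] = None,
                         callback_template: Optional[str] = None) -> urllib.request.Request:
    """Build the request that imports the temporary file into the dataset."""
    # Required: where Orgvue reads the uploaded data from (GET URL from step 5)
    payload = {"importLocation": {"method": "GET", "url": get_url}}
    if result_put_url:
        # Optional; if omitted, the result is posted using the original PUT URL
        payload["resultLocation"] = {"method": "PUT", "url": result_put_url}
    if callback_template:
        # Optional; the template may contain {{jobStatus}}, {{traceId}}, {{tenantId}}
        payload["callback"] = {"method": "GET", "urlTemplate": callback_template}
    host = base_url.removeprefix("https://").rstrip("/")
    return urllib.request.Request(
        f"https://{host}/api/v1/{tenant_id}/datasets/{dataset_id}/items/import",
        data=json.dumps(payload).encode("utf-8"),
        method="PUT",  # assumed method; confirm in the API documentation
        headers={
            "Content-Type": "application/json; charset=UTF-8",
            "Authorization": f"Bearer {api_token}",
        },
    )
```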

See the API documentation for further details on query parameters.
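When a callback `urlTemplate` like the one in the payload above is used, the notification presumably arrives as a GET request with the placeholders replaced by real values. A minimal sketch of reading those values on your side, whatever web framework receives the request; `parse_callback` is a hypothetical helper, and the possible `jobStatus` values are not listed in this guide, so check the API documentation before branching on them.

```python
from urllib.parse import parse_qs, urlsplit


def parse_callback(url: str) -> dict:
    """Return the query values filled into the callback urlTemplate."""
    # Works for a full URL or just the path and query your server receives
    query = parse_qs(urlsplit(url).query)
    # parse_qs returns a list per parameter; each is expected only once here
    return {name: values[0] for name, values in query.items()}
```

With the template above, the returned dictionary would contain the `status`, `traceId` and `tenantId` values; logging `traceId` alongside the status may help when following up on a failed import.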

Async Import Postman Collection

We also provide a Postman collection for Async Import.

Before you can run any of the requests, you need to set up the variables on the Postman collection's Variables tab:

- set the bearerToken variable to your generated API token

- set baseUrl to the base URL on which you created your API token, for example https://orgvue.eu-west-1.concentra.io

- set tenantId to the name of your tenant

- set datasetId to the unique dataset id of your dataset

You can now run the first request, 'Generate temporary file'. This creates a temporary file and provides you with two links:

- a PUT link, which you will use to upload the item updates you wish to import

- a GET link, which you will use to import these item updates to the dataset

After running this request, copy the generated PUT link into the URL field of the second request, 'Upload data to temporary file'. The body of this request should contain the item updates you want to upload.

Once the second request has been sent, copy the generated GET link into the 'url' field of the 'importLocation' object in the request body of the third request, 'Import data into Orgvue'. Once this final request is sent, your item updates will be imported into your Orgvue dataset.
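The three Postman requests can also be chained in a script instead of copying links by hand. The sketch below is hypothetical: the function names are illustrative, and it assumes the import call uses PUT and that the generated PUT URL needs no Authorization header. It takes a `send` callable so the flow can be exercised without network access; by default it sends with `urllib.request.urlopen`. It does not poll or wait for the callback, and the import response is returned as raw bytes because its format is not described in this guide.

```python
import json
import urllib.request
from typing import Callable

Send = Callable[[urllib.request.Request], bytes]


def urlopen_send(req: urllib.request.Request) -> bytes:
    # Default transport: a real HTTP call that returns the response body
    with urllib.request.urlopen(req) as resp:
        return resp.read()


def run_async_import(base_url: str, tenant_id: str, dataset_id: str,
                     api_token: str, items: list,
                     send: Send = urlopen_send) -> bytes:
    host = base_url.removeprefix("https://").rstrip("/")
    api_headers = {
        "Content-Type": "application/json; charset=UTF-8",
        "Authorization": f"Bearer {api_token}",
    }
    # 1. 'Generate temporary file': obtain the GET and PUT links
    gen = urllib.request.Request(
        f"https://{host}/api/v1/{tenant_id}/files/generate",
        data=json.dumps({"targetType": "json", "targetEncoding": "identity"}).encode("utf-8"),
        method="POST", headers=api_headers)
    urls = json.loads(send(gen))
    # 2. 'Upload data to temporary file': PUT the item updates to the PUT link
    send(urllib.request.Request(
        urls["put"], data=json.dumps(items).encode("utf-8"), method="PUT"))
    # 3. 'Import data into Orgvue': pass the GET link as importLocation
    imp = urllib.request.Request(
        f"https://{host}/api/v1/{tenant_id}/datasets/{dataset_id}/items/import",
        data=json.dumps({"importLocation": {"method": "GET", "url": urls["get"]}}).encode("utf-8"),
        method="PUT",  # assumed method
        headers=api_headers)
    return send(imp)
```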